RST-LoRA: A Discourse-Aware Low-Rank Adaptation for Long Document Abstractive Summarization

📄 Abstract

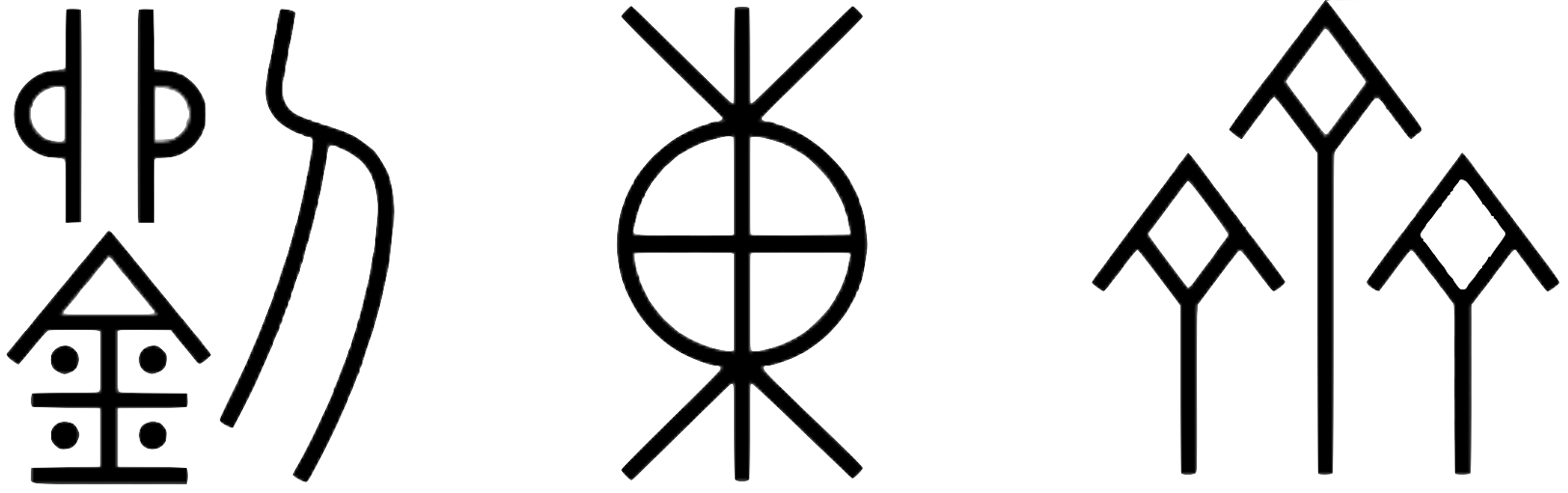

For long document summarization, discourse structure is important to discern the key content of the text and the differences in importance level between sentences. Unfortunately, the integration of rhetorical structure theory (RST) into parameter-efficient fine-tuning strategies for long document summarization remains unexplored. Therefore, this paper introduces RST-LoRA and proposes four RST-aware variants to explicitly incorporate RST into the LoRA model. Our empirical evaluation demonstrates that incorporating the type and uncertainty of rhetorical relations can complementarily enhance the performance of LoRA in summarization tasks. Furthermore, the best-performing variant we introduced outperforms the vanilla LoRA and full-parameter fine-tuning models, as confirmed by multiple automatic and human evaluations, and even surpasses previous state-of-the-art methods.
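To make the idea of a discourse-aware LoRA update more concrete, the sketch below shows a plain LoRA forward pass, `h = x W0 + (alpha / r) x A B`, with an optional per-token weight applied to the low-rank branch. It is only an illustration under stated assumptions and does not reproduce the paper's exact formulation or any of its four variants. The names `lora_forward` and `token_weights` are hypothetical, and treating discourse importance as a single scalar per token is our simplification.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_forward(x, w0, a, b, alpha, token_weights=None):
    """LoRA forward pass with an optional per-token weight on the low-rank branch.

    x:  (tokens, d_in) input activations
    w0: (d_in, d_out) frozen pretrained weight
    a:  (d_in, r) trainable down-projection
    b:  (r, d_out) trainable up-projection
    token_weights: hypothetical per-token discourse importance scores
                   (an assumption of this sketch, not the paper's RST matrix)
    """
    r = len(b)
    base = matmul(x, w0)   # frozen path
    low = matmul(x, a)     # (tokens, r) low-rank activations
    if token_weights is not None:
        # Scale each token's low-rank activation by its importance score.
        low = [[w * v for v in row] for w, row in zip(token_weights, low)]
    delta = matmul(low, b)  # (tokens, d_out) low-rank update
    scale = alpha / r
    return [[p + scale * q for p, q in zip(br, dr)] for br, dr in zip(base, delta)]


random.seed(0)
tokens, d_in, d_out, rank = 3, 4, 2, 2
x = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(tokens)]
w0 = [[random.gauss(0, 1) for _ in range(d_out)] for _ in range(d_in)]
a = [[random.gauss(0, 1) for _ in range(rank)] for _ in range(d_in)]
b = [[random.gauss(0, 1) for _ in range(d_out)] for _ in range(rank)]

plain = lora_forward(x, w0, a, b, alpha=4.0)
weighted = lora_forward(x, w0, a, b, alpha=4.0, token_weights=[1.0, 0.5, 0.0])
```

In this sketch, a token with weight `0.0` receives no low-rank update and falls back to the frozen path, while a weight of `1.0` recovers vanilla LoRA for that token.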

🔍 Overview

💻 Code

Our code is publicly available on GitHub:

📚 Citation

@inproceedings{pu-demberg-2024-rst,
    title = "{RST}-{L}o{RA}: A Discourse-Aware Low-Rank Adaptation for Long Document Abstractive Summarization",
    author = "Liu, Dongqi and Demberg, Vera",
    editor = "Duh, Kevin and Gomez, Helena and Bethard, Steven",
    booktitle = "Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers)",
    month = jun,
    year = "2024",
    address = "Mexico City, Mexico",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2024.naacl-long.121",
    doi = "10.18653/v1/2024.naacl-long.121",
    pages = "2200--2220",
}